Hello there. This is the third entry in a series documenting the development of Game Painter, my browser-based, graphical game prototyping engine, but you don’t need to read the previous entries to follow this one. Today I want to talk about the high-level design ideas that motivated the project, so here goes.

Direct Manipulation User Interfaces and Feedback Loops

When I was coming up with Game Painter, I wanted to make an analogue to Increpare’s PuzzleScript, a really great programming environment for prototyping puzzle games. But where PuzzleScript uses a scripting language as the human-computer interface, Game Painter would feature a graphical interface where users interact with games directly rather than through code.

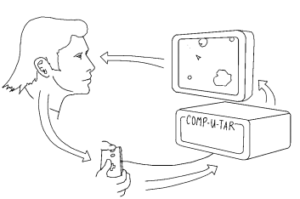

I wanted to make a user interface that encouraged improvisation and creation by reaction, by which I mean the following. The programmer takes an action. The computer processes that action and then responds by displaying something on the screen. This causes the programmer to think for a moment and take another action in response. What I’ve described is exactly what we talk about when we talk about what it means to play a video game. To play a video game is to be in a feedback loop with the computer.

Image from the book Game Feel by Steve Swink

Ironically, the experience of programming games is very far removed from the experience of playing them. When you code, you’re at a remove from the thing you’re programming. You’re removed by time (it takes time to compile and run code; you don’t get instantaneous feedback). You’re removed by senses (you can’t see, feel, hear, or touch what you’re making; you have to imagine it, which is error-prone). This seems to be an area where direct manipulation interfaces have an advantage over conventional, text-based interfaces.

On the other hand, text has its own advantages over direct manipulation. It’s lightweight. It’s composable. Perhaps most importantly, it’s inherently symbolic, so it’s easy to express arbitrary abstractions in text. I wonder to what extent direct manipulation interfaces could have the same abstractive power. In my experience, it feels harder to wring useful abstractions out of a direct manipulation interface than out of a textual one, but perhaps that’s simply because it’s an unsolved problem awaiting a future solution. As a bit of a side note, because of their various trade-offs, I think the borderline between direct, graphical interfaces and indirect, symbolic interfaces could be a very fruitful one.

Analogy in Programming Environments

So it was important to me to create a feedback loop between human and computer.

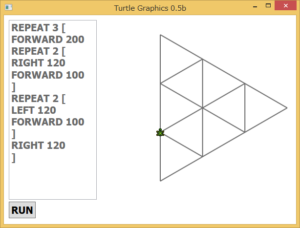

I was inspired by other programming environments that use metaphor as a bridge between human and computer. For example, in Logo, users program a “turtle”. The turtle acts as a point of contact between the mental model of the user and the mental model of the computer. The user can identify with the point of view of the turtle, which is a starting point toward understanding how the system works as a whole.

Screenshot of a programming environment derived from Logo

In Game Painter, the metaphor is of painting, and I try to reinforce it wherever I can. Users draw from palettes to choose different colors. They paint rule canvases to create programs. And they paint sprite and level canvases to create the data that their programs act on. While Game Painter offers no avatar to mentally latch on to, the painting metaphor tells the user what sort of actions they can take to interact with the system.

Seeing What the Computer Is Thinking

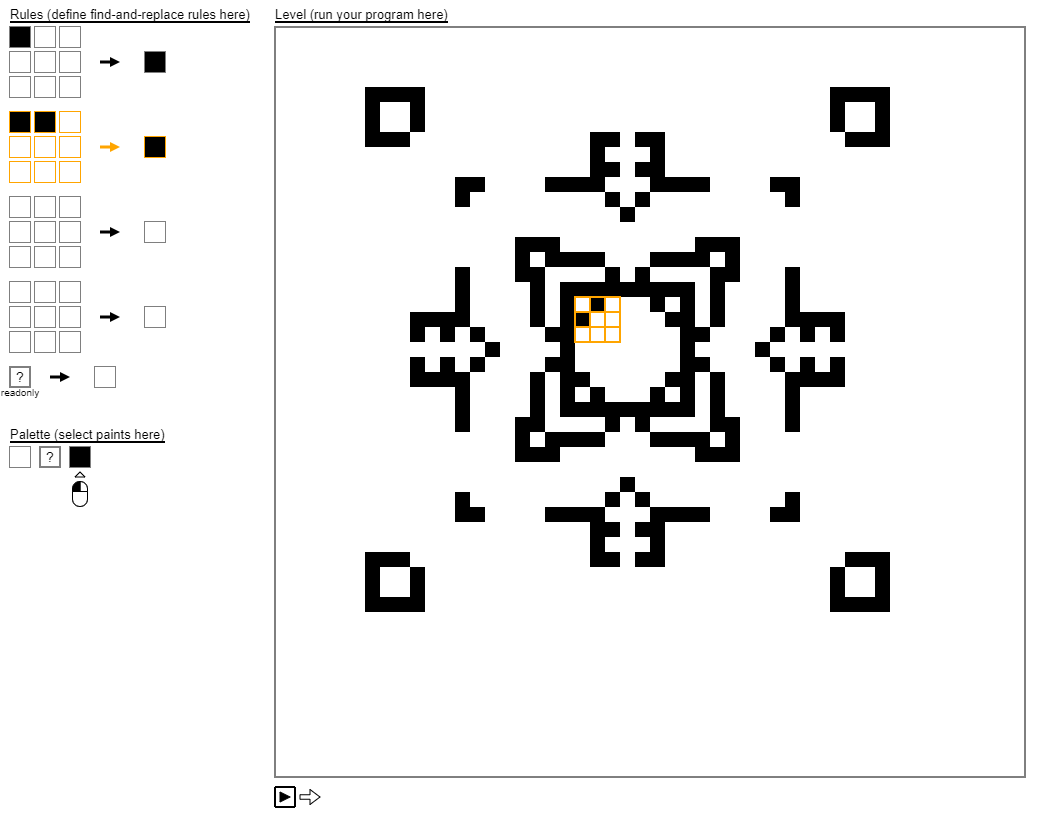

Metaphor alone is not sufficient to bridge the gap between human and computer. Usability needs to be considered. There needs to be a way for the user to see what the computer is thinking, which is an idea I took from the essay Learnable Programming by Bret Victor. This is something I’ve just started to work on.

This screenshot shows a new feature: when the user mouses over a cell in the level canvas (the canvas to the right), the computer shows what it plans to do with that cell, and why, by highlighting the relevant parts of the GUI. In this case, it highlights the cell’s local neighborhood in the level along with the rule canvas (the canvases to the left) that matches that neighborhood.
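To make the idea concrete, here is a rough sketch in Python of how a hover lookup like this could work. It is an illustration under stated assumptions, not Game Painter’s actual code: the 3x3 neighborhood size, the `*` wildcard color, and the names `neighborhood`, `matches`, and `hover_info` are all hypothetical, and each rule is modeled as nothing more than a 3x3 pattern of colors.

```python
from typing import List, Optional, Tuple

Grid = List[List[str]]
WILDCARD = "*"  # assumption: a pattern cell that matches any color


def neighborhood(level: Grid, row: int, col: int) -> List[List[Optional[str]]]:
    """Return the 3x3 block centered on (row, col); None marks out-of-bounds cells."""
    block = []
    for r in range(row - 1, row + 2):
        line = []
        for c in range(col - 1, col + 2):
            inside = 0 <= r < len(level) and 0 <= c < len(level[r])
            line.append(level[r][c] if inside else None)
        block.append(line)
    return block


def matches(pattern: Grid, block: List[List[Optional[str]]]) -> bool:
    """A pattern matches when every non-wildcard cell equals the level cell."""
    return all(
        p == WILDCARD or p == b
        for pattern_row, block_row in zip(pattern, block)
        for p, b in zip(pattern_row, block_row)
    )


def hover_info(level: Grid, rules: List[Grid], row: int, col: int) -> Tuple[List[Tuple[int, int]], List[int]]:
    """Return the in-bounds cells to highlight and the indices of matching rules."""
    block = neighborhood(level, row, col)
    cells = [
        (r, c)
        for r in range(row - 1, row + 2)
        for c in range(col - 1, col + 2)
        if 0 <= r < len(level) and 0 <= c < len(level[r])
    ]
    matching = [i for i, pattern in enumerate(rules) if matches(pattern, block)]
    return cells, matching


# A tiny level with one red cell, and two rules keyed on the center color.
level = [list("..."), list(".R."), list("...")]
rules = [
    [list("***"), list("*R*"), list("***")],  # fires when the center is red
    [list("***"), list("*B*"), list("***")],  # fires when the center is blue
]
cells, matching = hover_info(level, rules, 1, 1)
```

Hovering over the red cell at `(1, 1)` yields all nine cells of the level to highlight and `[0]` as the list of matching rules, which is the information a GUI would need to light up both the neighborhood and the corresponding rule canvas.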

Hopefully, this can start to build a picture in the user’s mind about how different parts of the system relate to one another and form a coherent whole. Who knows how far this will go to help the user understand what the computer’s thinking, but it’s a start, and its ultimate effectiveness is something for user testing to suss out.

This concludes this entry in the dev log. If you want to keep up with the project, follow me on Twitter @objstothinkwith. Thanks for reading!